The EU AI Act, which entered into force on August 1, 2024, regulates AI systems according to four risk levels: Unacceptable, High, Limited, and Minimal Risk. It applies to U.S. companies that place AI systems on the EU market or whose AI outputs are used in the EU, even without a physical presence there. The most serious violations can draw fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher.

Key Points:

Who Needs to Comply?

- Companies marketing AI in the EU or whose AI impacts EU individuals/businesses.

- Firms integrating AI into products sold in the EU. (Processing EU residents' data is mainly a GDPR trigger, but it often signals that AI outputs are used in the EU.)

- Businesses using AI in HR for EU employees (e.g., resume screening).

Risk Categories:

- Unacceptable Risk: Banned practices like social scoring or real-time remote biometric identification in public spaces for law enforcement (with narrow exceptions).

- High Risk: AI affecting safety, rights, or critical sectors (e.g., credit scoring, medical devices).

- Limited Risk: AI with potential for manipulation (e.g., chatbots, deepfakes).

- Minimal Risk: Low-impact AI (e.g., spam filters) with no mandatory obligations.

Compliance Deadlines:

- February 2, 2025: Prohibited practices banned; AI literacy obligations (Article 4) apply.

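- August 2, 2026: Most remaining obligations apply, including those for the high-risk systems listed in Annex III (e.g., hiring, credit scoring).

- August 2, 2027: Obligations apply to high-risk AI embedded in products covered by EU product-safety legislation, such as medical devices.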

What’s Required?

- Providers must ensure risk management, data governance, and technical documentation.

- Deployers are responsible for correct implementation, human oversight, and monitoring.

To prepare, U.S. companies should inventory AI systems, classify them by risk, and address compliance gaps. The EU AI Act is shaping global AI regulation, much like GDPR did for data privacy.

Does the EU AI Act Apply to Your Company?

Criteria That Trigger Compliance

The EU AI Act casts a wide net, extending its reach far beyond Europe itself. If you're a U.S.-based company, you may still need to comply with the Act under these circumstances:

- You market AI systems in the EU or deploy them there. This includes offering AI-powered tools to customers within the EU.

- Your AI system's outputs are used in the EU. If the predictions, content, recommendations, or decisions your AI produces are used in the EU, the Act can apply even if the system itself runs elsewhere.

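- You process data about EU residents. Handling personal data of people in the EU is primarily a GDPR question and does not, on its own, trigger the AI Act. It still warrants a closer look, because AI that analyzes EU residents' data often produces outputs that are used in the EU.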

- Your AI technology is part of products sold by EU companies. If your AI components are integrated into products marketed by European partners, the Act’s supply chain rules apply to you.

- You use AI in HR functions for EU employees. This includes activities like screening resumes of EU candidates or assessing the performance of employees based in Europe, regardless of where your systems or HR teams are located.

These scenarios highlight how the Act applies across borders, making it essential to understand its implications for your business.

Industries and Use Cases Most Likely Affected

Certain industries face greater challenges under the EU AI Act due to their reliance on AI systems deemed high-risk. Here are some examples:

- Healthtech companies using AI for diagnostics or robotic surgery, or embedding it in medical devices, typically fall under the high-risk category.

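- Fintech firms using AI for credit scoring or creditworthiness assessments, and insurers using it for risk assessment and pricing in life and health insurance, face stringent regulations because of the potential consequences for financial access. AI used solely to detect financial fraud is expressly excluded from this high-risk category.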

- SaaS and HR technology providers offering AI tools for resume screening, candidate ranking, or productivity analytics are also classified as high-risk systems.

- EdTech platforms that use AI for scoring exams or evaluating student applications must adhere to strict accuracy and fairness standards.

The Act’s focus isn’t limited to where your company operates - it’s about whether your AI systems interact with the EU market. As KPMG puts it, "The EU AI Act is applicable to many U.S. companies, potentially even including those with no physical EU presence".

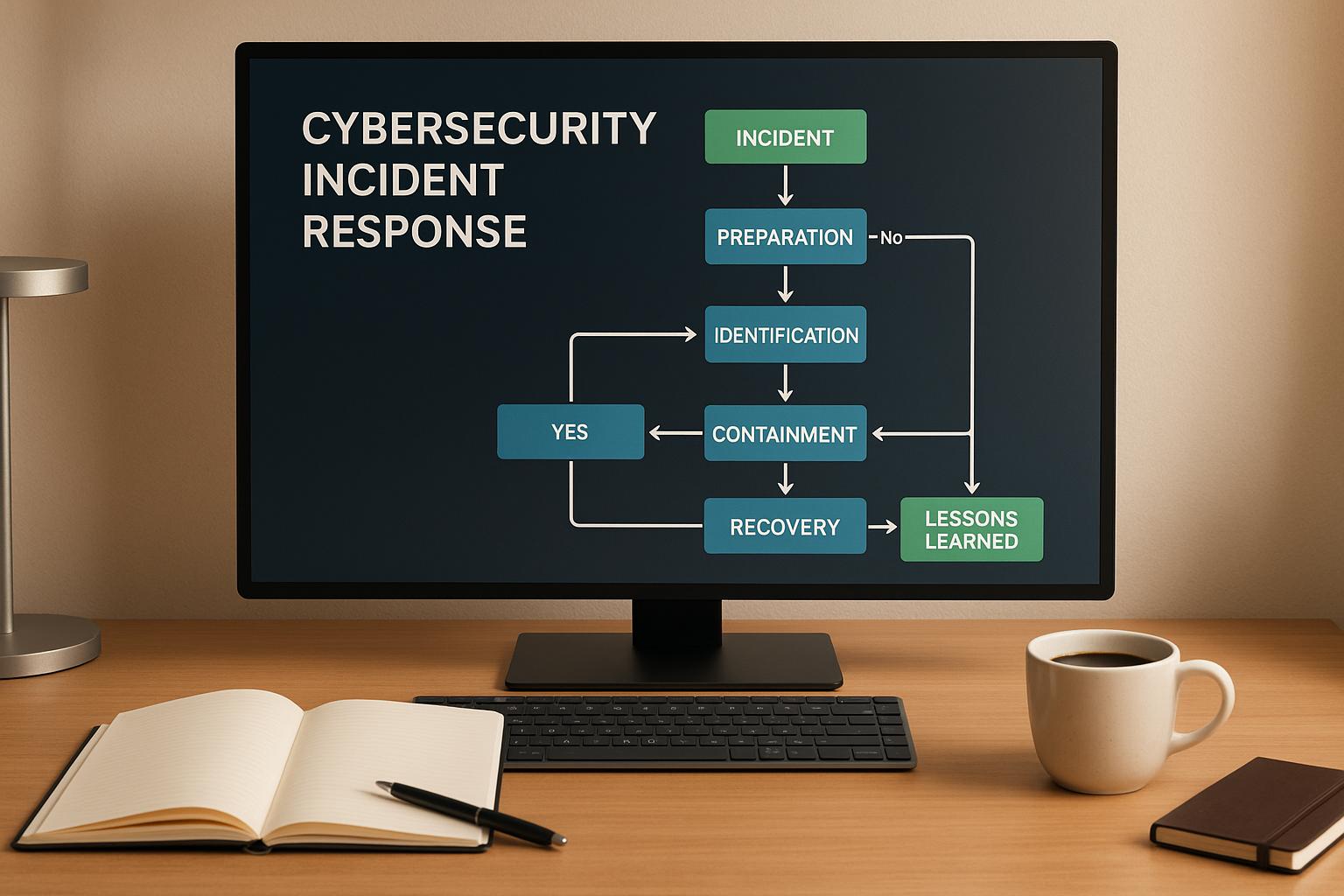

The 4 Risk Categories Under the EU AI Act

EU AI Act Risk Categories and Compliance Requirements

How the Risk Tiers Work

For U.S. companies looking to comply with EU regulations, understanding the risk tiers outlined in the EU AI Act is essential. These tiers categorize AI systems based on their potential to harm safety or infringe on rights, with each tier carrying specific rules - or in some cases, outright bans.

At the highest level is Unacceptable Risk, which covers AI practices deemed incompatible with EU values. These are banned outright. Examples include social scoring systems, real-time remote biometric identification in publicly accessible spaces for law enforcement (subject to narrow exceptions), and emotion recognition tools used in schools or workplaces. These prohibitions have applied since February 2, 2025.

The next tier is High-Risk, covering AI systems that could significantly affect health, safety, or fundamental rights. This includes tools like recruitment software that screens resumes, credit scoring algorithms, and AI integrated into medical devices. Initially, the European Commission estimated that 5–15% of AI applications would fall under this category. However, a 2023 study examining 106 enterprise AI systems found that 18% were classified as high-risk. These systems face strict compliance requirements, including risk management, data governance, detailed technical documentation, and mandatory human oversight.

Limited Risk applies to AI systems that might manipulate or deceive users. Examples include chatbots and deepfake generators. Transparency is key here - users must be informed when interacting with AI, and deepfakes must be clearly labeled.

Finally, Minimal Risk represents the majority of AI applications, such as spam filters and AI-powered video games. These systems have no mandatory compliance requirements under the Act, though companies are encouraged to follow voluntary codes of conduct.

"The AI Act is the first-ever legal framework on AI, which addresses the risks of AI and positions Europe to play a leading role globally."

– European Commission

The table below provides a clear comparison of these risk categories.

Risk Tier Comparison Table

| Risk Category | Definition | Examples | Compliance Requirements |
|---|---|---|---|
| Unacceptable | Poses severe safety and rights risks | Social scoring, real-time biometric ID in public spaces, emotion recognition in schools/workplaces | Prohibited from the EU market |
| High-Risk | Significant impact on health, safety, or rights | AI in critical infrastructure, recruitment (resume screening), credit scoring, medical devices | Requires measures like risk management, data governance, technical documentation, and human oversight |
| Limited | Risk of manipulation or deceit | Chatbots, deepfakes | Must ensure transparency: users must know they are interacting with AI, and deepfakes must be labeled |
| Minimal | Little to no risk | Spam filters, AI-enabled video games, inventory management | No mandatory obligations; voluntary codes of conduct encouraged |

Violating these rules can lead to steep penalties. Using a prohibited AI system could result in fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. Breaching the obligations for high-risk systems, including data governance requirements, can cost up to €15 million or 3% of worldwide annual turnover.
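The "whichever is higher" rule means that for large companies the percentage cap, not the fixed amount, sets the ceiling. The Python sketch below illustrates that arithmetic using the three penalty tiers in Article 99 of the final text (€35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, €7.5 million or 1% for supplying incorrect or misleading information), plus the reversed "whichever is lower" rule for SMEs and start-ups. The names `FineTier` and `max_fine_eur` are hypothetical, and the function returns only the statutory ceiling; the fine actually imposed depends on factors such as the gravity and duration of the violation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    """One penalty tier: a fixed cap in euros and a share of worldwide annual turnover."""
    name: str
    fixed_cap_eur: int
    turnover_percent: int  # whole percent, e.g. 7 for 7%


# Tiers as set out in Article 99 of the AI Act (final text).
PROHIBITED_PRACTICE = FineTier("prohibited practice", 35_000_000, 7)
OTHER_OBLIGATION = FineTier("most other obligations", 15_000_000, 3)
MISLEADING_INFO = FineTier("incorrect or misleading information", 7_500_000, 1)


def max_fine_eur(tier: FineTier, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the statutory ceiling for a violation in the given tier.

    Large companies face the higher of the two caps; SMEs and start-ups the lower.
    Integer arithmetic avoids floating-point rounding on large turnovers.
    """
    turnover_cap = worldwide_turnover_eur * tier.turnover_percent // 100
    if is_sme:
        return min(tier.fixed_cap_eur, turnover_cap)
    return max(tier.fixed_cap_eur, turnover_cap)


if __name__ == "__main__":
    # EUR 2 billion turnover: 7% (EUR 140M) exceeds the EUR 35M fixed cap.
    print(max_fine_eur(PROHIBITED_PRACTICE, 2_000_000_000))  # 140000000
    # EUR 100 million turnover: 7% is only EUR 7M, so the EUR 35M fixed cap applies.
    print(max_fine_eur(PROHIBITED_PRACTICE, 100_000_000))  # 35000000
```

Keeping the tiers as data rather than hard-coded branches makes it easy to update the figures if the penalty provisions are amended.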

What U.S. Companies Must Do to Comply

Requirements for AI System Providers

If your company develops an AI system and places it on the EU market or puts it into service under its own name or trademark, you are a Provider. For high-risk systems, you’ll need to establish a continuous risk management system to identify and address potential harms.

Maintaining strict data governance is crucial. This means using high-quality, diverse datasets for training and testing, and keeping detailed records of data sources, preprocessing steps, and validation processes. Additionally, you’ll need to prepare thorough technical documentation that outlines system design, training methods, evaluation processes, and performance metrics, as these will be subject to regulatory review.

Another key requirement is conducting mandatory conformity assessments for high-risk systems. Your AI must also be designed with human oversight capabilities, allowing trained personnel to step in or override decisions when necessary. As KPMG points out, "The chief information security officer (CISO) is a key senior role that will be most affected by the EU AI Act, as it will impact how businesses develop and deploy AI technologies and secure their data".

For limited-risk systems, such as chatbots or deepfake generators, transparency is the priority. You must clearly inform users they’re interacting with AI and label AI-generated content. Breaching these transparency rules can lead to fines of up to €15 million or 3% of worldwide annual turnover, whichever is higher.

Requirements for AI System Deployers

While Providers focus on building and documenting AI systems, Deployers are responsible for ensuring their proper use in real-world settings. If your company deploys AI systems, your role involves implementation and ongoing monitoring to ensure responsible usage.

Human oversight plays a critical role. Trained personnel must continuously monitor the system and have the authority to intervene when necessary. Ogletree Deakins highlights this requirement, stating, "Employers will be required to ensure human oversight, worker notice, and logging processes are operational by [August 2026]".

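Monitoring doesn’t stop there. You’ll need to track real-world performance to identify and address adverse impacts, such as discrimination, and document these issues promptly. For high-risk systems, you must keep the logs the system automatically generates for at least six months, unless other EU or national law, such as data protection law, provides otherwise. Employers deploying high-risk AI for HR tasks like résumé screening or performance reviews must inform workers' representatives and affected workers before the system goes live. Certain deployers must also conduct a Fundamental Rights Impact Assessment (FRIA) before first use: public bodies, private entities providing public services, and deployers of AI used for credit scoring or for life and health insurance pricing.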
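The six-month floor is straightforward to encode in a log-retention job. The Python sketch below assumes each log carries a creation date; it computes the earliest date a log may be deleted and clamps month-end dates (a log created on August 31 becomes deletable on the last day of February). The function names are hypothetical, and `retention_months` is a parameter because data protection or sector-specific law may require a different period.

```python
import calendar
from datetime import date

# Deployer minimum for automatically generated logs of high-risk systems.
MIN_RETENTION_MONTHS = 6


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the target month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def earliest_deletion_date(log_created: date, retention_months: int = MIN_RETENTION_MONTHS) -> date:
    """First date on which a log created on `log_created` may be deleted."""
    return add_months(log_created, retention_months)


def may_delete(log_created: date, today: date, retention_months: int = MIN_RETENTION_MONTHS) -> bool:
    """True once the minimum retention period has fully elapsed."""
    return today >= earliest_deletion_date(log_created, retention_months)


if __name__ == "__main__":
    print(earliest_deletion_date(date(2026, 8, 31)))  # 2027-02-28
    print(may_delete(date(2026, 3, 15), date(2026, 9, 14)))  # False
```

The check only prevents deleting too early; nothing stops a team from keeping logs longer if another obligation calls for it.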

Transparency and accountability are equally important. Notify individuals when a high-risk AI system makes or assists decisions about them, and be prepared for incident reporting. If you identify a serious incident, you must immediately inform the provider and then the relevant national market surveillance authorities. To streamline compliance, update vendor contracts to include clear instructions for use, technical documentation, and support for incident reporting.

| Feature | Requirements for Providers | Requirements for Deployers |
|---|---|---|
| Primary Focus | System design, safety, and documentation | Proper implementation, monitoring, and usage |
| Key Tasks | Conformity assessments, technical documentation, and risk management | Human oversight, worker notification, and impact monitoring |
| Documentation | Must provide "Instructions for Use" to the deployer | Must maintain logs and record-keeping of system usage |
| Transparency | Ensure the system is designed to be transparent | Inform users/workers when they are interacting with AI |

How to Prepare for EU AI Act Compliance

Assess Your Current AI Systems and Gaps

Start by creating a detailed inventory of all the AI systems your organization uses, develops, or purchases. This should include everything from internal tools to customer-facing products, as well as any AI integrated into third-party software. It's important to note that even companies without a direct presence in the EU could still be impacted by these regulations.

Once you’ve compiled this inventory, classify each system based on the EU AI Act’s four risk categories: Unacceptable, High, Limited, or Minimal Risk. The European Commission estimates that only 5% to 15% of AI applications will fall under the high-risk category. However, a study by appliedAI revealed that 40% of enterprise AI systems had unclear risk classifications, highlighting the importance of precise categorization.
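A first-pass classification can be scripted against the inventory, as long as every result is treated as a triage label for legal review rather than a legal determination. The Python sketch below assumes each system record lists hypothetical use-case tags (such as `resume_screening` or `chatbot`) drawn from the examples in this article. It returns the most severe matching tier and routes any system with missing or unrecognized tags to an "unclear" bucket, since an unknown use could turn out to be high-risk. `AISystem`, `triage`, and the tag names are illustrative and are not defined by the Act.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"
    UNCLEAR = "unclear"  # needs legal review before any obligations are assumed


# Hypothetical tags, each mapped to the tier this article associates with the use case.
PROHIBITED_USES = {"social_scoring", "workplace_emotion_recognition"}
HIGH_RISK_USES = {"resume_screening", "credit_scoring", "medical_device", "exam_scoring"}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation"}
MINIMAL_USES = {"spam_filter", "video_game", "inventory_management"}
KNOWN_USES = PROHIBITED_USES | HIGH_RISK_USES | TRANSPARENCY_USES | MINIMAL_USES


@dataclass
class AISystem:
    name: str
    vendor: str
    use_cases: set[str] = field(default_factory=set)
    used_in_eu: bool = True


def triage(system: AISystem) -> RiskTier:
    """Return the most severe tier matched; missing or unknown tags fall back to UNCLEAR."""
    uses = system.use_cases
    if uses & PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if uses & HIGH_RISK_USES:
        return RiskTier.HIGH
    if not uses or uses - KNOWN_USES:
        # An unrecognized use could be high-risk, so never default it to a lower tier.
        return RiskTier.UNCLEAR
    if uses & TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    inventory = [
        AISystem("ResumeRanker", "third-party vendor", {"resume_screening"}),
        AISystem("SupportBot", "in-house", {"chatbot"}),
        AISystem("ChurnModel", "in-house", {"churn_prediction"}),
        AISystem("WarehouseForecaster", "in-house", {"inventory_management"}, used_in_eu=False),
    ]
    for system in inventory:
        if system.used_in_eu:  # scope check first, then classification
            print(system.name, triage(system).value)
```

Run as a script, it prints `ResumeRanker high`, `SupportBot limited`, and `ChurnModel unclear`, and skips the system that is not used in the EU.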

Next, identify gaps between your current practices and the regulatory requirements. Will Relf, Director of Data at Aztec Group, emphasizes the importance of high-quality data governance:

"The most efficient way to [mitigate AI risk] is to ensure that the data used to train the AI system is itself well-governed and of high quality".

Additionally, ensure that your team is equipped with the necessary knowledge. Article 4 of the Act, which has applied since February 2, 2025, requires providers and deployers to ensure, to the best extent possible, a sufficient level of AI literacy among staff who operate or use AI systems on their behalf. This combination of system assessment, gap analysis, and staff training forms the foundation for effective compliance.

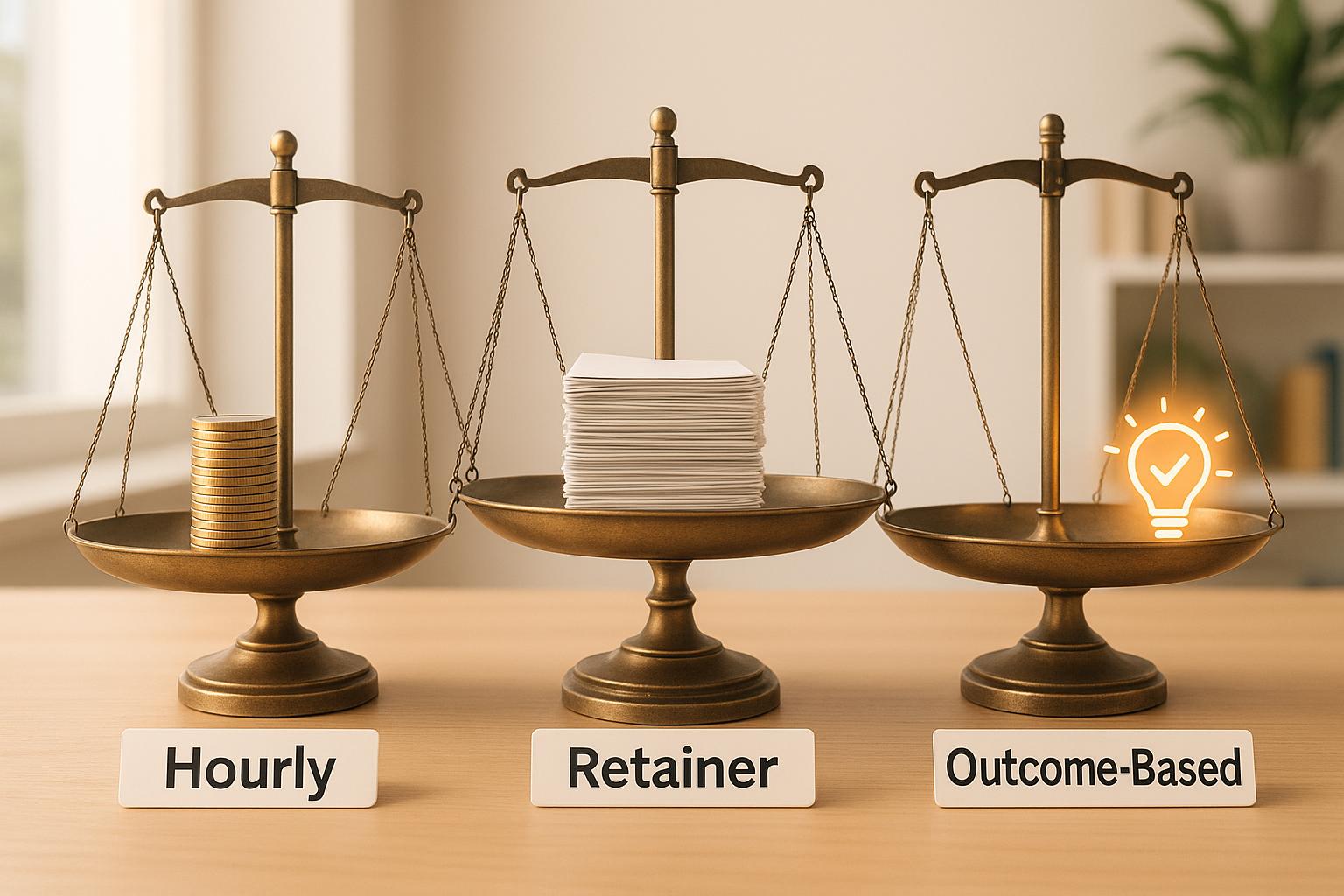

Using Managed Services to Handle Compliance Work

While compliance automation tools can help track tasks and map risks to regulatory controls, they often fall short when it comes to implementing the required actions. Organizations still need skilled support to configure systems, gather evidence, and prepare technical documentation. Managed services can fill this critical gap.

For example, Cycore acts as an embedded compliance team, handling tasks like gap analysis, evidence collection, and audit preparation. This allows your internal team to stay focused on driving business growth. By combining automated tools with expert oversight, managed services ensure your compliance efforts align with both immediate needs and long-term strategic goals.

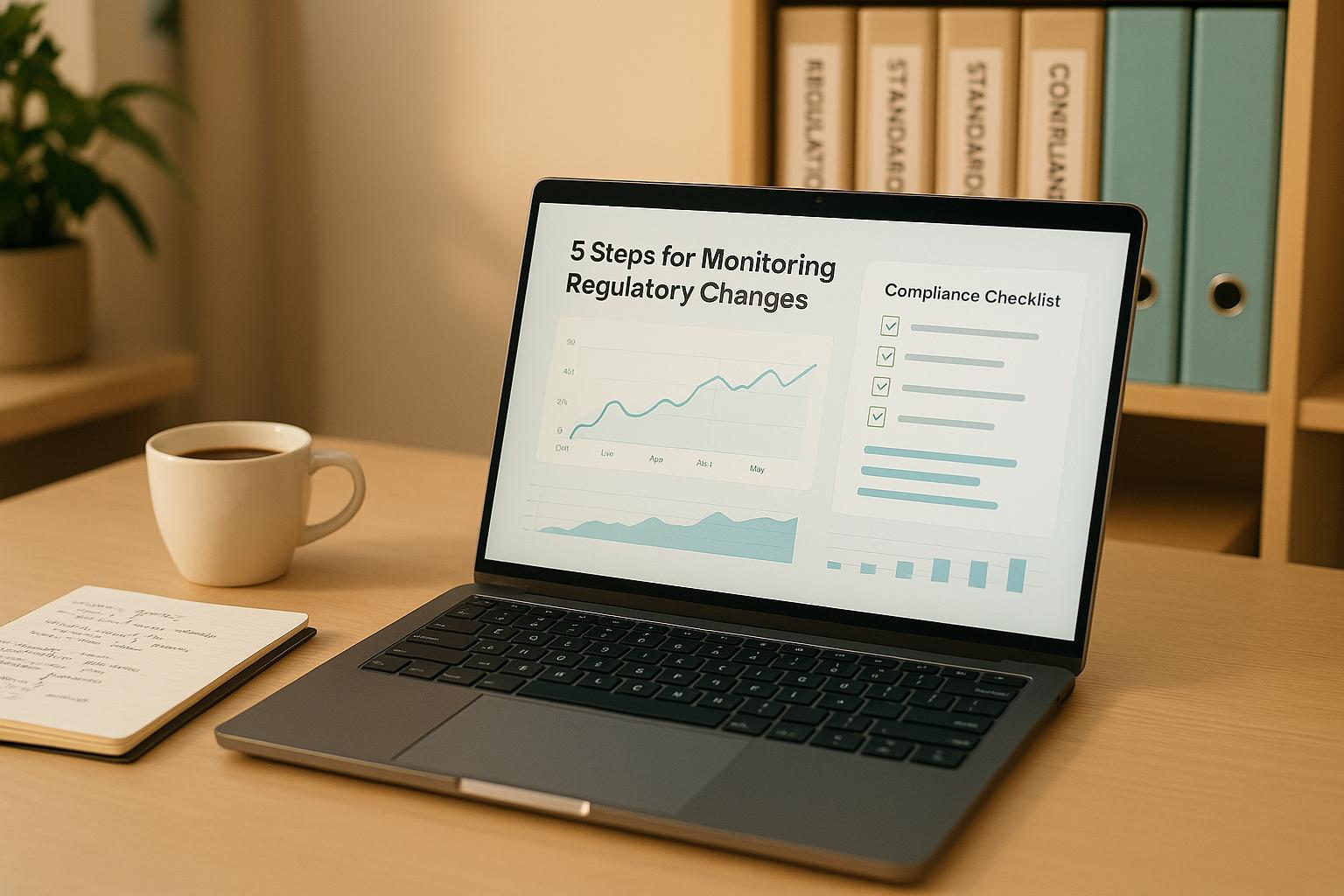

Maintaining Compliance Over Time

Compliance doesn’t end once initial measures are in place - it's an ongoing process. Regular updates to documentation, system performance monitoring, and incident reporting are essential as new requirements and prohibitions come into effect.

Proskauer Rose LLP underscores the importance of continuous monitoring:

"The 2 August 2026 grace period should not exclude a high-risk AI system from any inventory... changes to high-risk AI systems need to be tracked as part of ongoing compliance work".

High-risk AI systems placed on the market before August 2, 2026 generally fall outside the high-risk obligations, but they come within scope if they undergo significant changes in their design on or after that date. By keeping a close eye on system updates and regulatory shifts, you can ensure your organization remains compliant over time.
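The transition rule for legacy systems reduces to two date comparisons that an inventory tool can apply to each high-risk entry. The Python sketch below is a simplification under stated assumptions: it treats the high-risk obligations as applying to a system placed on the market on or after August 2, 2026, or to an earlier system that undergoes a significant design change on or after that date, and it ignores the separate August 2, 2030 deadline for high-risk systems used by public authorities. Whether a change counts as "significant" remains a legal judgment that the date input hides; `high_risk_rules_apply` is a hypothetical name.

```python
from datetime import date
from typing import Optional

# Date from which most high-risk obligations apply.
HIGH_RISK_APPLICATION_DATE = date(2026, 8, 2)


def high_risk_rules_apply(placed_on_market: date, significant_change: Optional[date] = None) -> bool:
    """Simplified legacy-system check for one high-risk AI system.

    placed_on_market: date the system was placed on the market or put into service.
    significant_change: date of the most recent significant design change, if any.
    Ignores the separate 2030 deadline for systems used by public authorities.
    """
    if placed_on_market >= HIGH_RISK_APPLICATION_DATE:
        return True
    # A legacy system comes into scope only through a significant change on or after the date.
    return significant_change is not None and significant_change >= HIGH_RISK_APPLICATION_DATE


if __name__ == "__main__":
    print(high_risk_rules_apply(date(2025, 3, 1)))  # False
    print(high_risk_rules_apply(date(2025, 3, 1), date(2026, 11, 1)))  # True
```

Recording the date of each significant change in the inventory, rather than a simple yes/no flag, is what makes this check possible later.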

Conclusion

Main Points to Remember

Here’s a quick recap of the key takeaways from earlier sections:

The EU AI Act applies to U.S. companies whose AI systems are placed on the EU market or whose AI outputs are used in the European Union, even without a physical presence there. This regulation uses a risk-based framework, categorizing AI systems into four levels of risk. High-risk systems, in particular, face stringent requirements, including detailed technical documentation, human oversight, and ongoing monitoring.

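Non-compliance isn’t cheap - violations can lead to fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. The next big date to keep in mind? August 2, 2026, when obligations for most high-risk AI systems take effect; high-risk AI embedded in regulated products such as medical devices follows on August 2, 2027.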

To prepare, U.S. companies need to act now by cataloging their AI systems, assessing their risk levels, and identifying any gaps in compliance. Article 4 also highlights the importance of ensuring that employees working with AI systems have the necessary knowledge and skills to understand both the benefits and risks of AI. Additionally, if your company provides high-risk AI systems and has no establishment in the EU, you’ll need to appoint an authorized representative in the EU before making those systems available there.

How Cycore Simplifies EU AI Act Compliance

Navigating these challenges is no small task, but that’s where Cycore steps in. Acting as your dedicated compliance partner, Cycore takes the burden off your team by managing all aspects of EU AI Act compliance, allowing you to focus on growing your business.

Cycore combines AI-driven tools with human expertise. While AI agents handle tasks like evidence collection and gap identification, specialists oversee strategy and risk management. Their comprehensive approach includes conducting gap analyses, implementing necessary controls, compiling technical documentation, and preparing for audits - all for a predictable monthly fee.

This solution saves your engineering and operations teams from spending countless hours on manual compliance work. And as the EU AI Act sets the tone for global AI regulation - similar to how the GDPR reshaped data privacy standards - Cycore ensures you stay ahead of the curve. By demonstrating your commitment to responsible AI practices, you not only meet regulatory expectations but also build stronger trust with customers and investors.

FAQs

How can U.S. companies determine if their AI systems are subject to the EU AI Act?

To figure out if your AI system is subject to the EU AI Act, start by considering whether it is available, used, or has an impact within the EU market. This includes systems that directly affect EU users. Next, determine if your system is categorized as high-risk under the Act. High-risk areas include sectors like critical infrastructure, education, employment, or law enforcement. Lastly, carefully examine the requirements detailed in the EU AI Act to verify its relevance to your system. Pay close attention to the obligations that apply to your specific industry and use case to ensure your system meets the necessary compliance standards.

What should U.S. companies do to comply with the EU AI Act?

To align with the EU AI Act, U.S. companies need to take a few essential steps:

- Determine if the Act applies to you: Start by assessing whether your AI systems are being used or made available to users in the EU. Pay special attention if your systems are considered high-risk, such as those involved in critical infrastructure, hiring processes, credit scoring, or law enforcement.

- Familiarize yourself with the requirements: Dive into the Act’s rules around safety, transparency, and data governance. Pay close attention to obligations for high-risk and general-purpose AI systems, and connect these regulations to your business’s specific applications.

- Develop a compliance plan: Perform a gap analysis to identify where your current practices fall short of the Act’s standards. Create a detailed roadmap to address these gaps, focusing on risk management, documentation, and ongoing monitoring. For high-risk systems, complete the required conformity assessment and affix the CE marking before placing the system on the EU market.

- Stay ahead of the curve: Set up internal AI governance by designating clear responsibilities for compliance and keeping technical documentation up to date. Keep an eye on any regulatory changes and consult with experts when necessary.

By following these steps, U.S. companies can position themselves to meet the EU AI Act’s requirements as its phased deadlines, which began on February 2, 2025 and run through August 2, 2027, take effect.

What are the consequences for not complying with the EU AI Act?

Failure to comply with the EU AI Act comes with hefty consequences. Companies that engage in prohibited AI practices face fines of up to €35 million or 7% of their worldwide annual turnover, whichever is higher. Breaches of most other obligations can draw fines of up to €15 million or 3%, and supplying incorrect or misleading information to authorities up to €7.5 million or 1%.

These steep fines underscore the urgency for businesses to fully grasp the regulation and ensure their operations align with its requirements, protecting themselves from both financial setbacks and damage to their reputation.